Dataset

- BraTS 2018 Dataset

- 210 High-Grade Glioma (HGG) Cases

- 75 Low-Grade Glioma (LGG) Cases

- Each Volume Is 240 × 240 × 155 Voxels

- T1, T2, FLAIR and T1c (Contrast-Enhanced T1) Modalities

- Whole Tumor, Tumor Core and Edema Ground Truth
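In BraTS 2018 the segmentation masks use label 1 for necrotic/non-enhancing tumor core, 2 for peritumoral edema and 4 for enhancing tumor, and the evaluation regions are unions of these labels. The sketch below builds those region masks with NumPy and, as an assumption about the notebook's array layout, reorders an (H, W, D) volume into the (slice, channel, row, column) stack implied by indexing such as `T1[90, 0, :, :]`. The helper names `region_masks` and `to_slice_stack` are hypothetical, and reading the NIfTI file itself (for example with nibabel's `nib.load(path).get_fdata()`) is left out.

```python
import numpy as np

# BraTS 2018 labels: 0 = background, 1 = necrotic / non-enhancing tumor core,
# 2 = peritumoral edema, 4 = enhancing tumor.
def region_masks(seg):
    """Return binary masks for the standard BraTS regions (hypothetical helper)."""
    return {
        "whole": np.isin(seg, [1, 2, 4]).astype(np.uint8),  # whole tumor = 1 + 2 + 4
        "core": np.isin(seg, [1, 4]).astype(np.uint8),      # tumor core = 1 + 4
        "enhancing": (seg == 4).astype(np.uint8),           # enhancing tumor = 4
        "edema": (seg == 2).astype(np.uint8),               # edema = 2
    }

def to_slice_stack(volume):
    """Reorder an (H, W, D) volume into (D, 1, H, W): one single-channel axial
    slice per entry, so stack[90, 0, :, :] is axial slice 90 (assumed layout)."""
    return np.transpose(volume, (2, 0, 1))[:, np.newaxis, :, :]
```

For a 240 × 240 × 155 volume, `to_slice_stack` yields an array of shape (155, 1, 240, 240).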


#@title
import matplotlib.pyplot as plt

# Slice 90 of each modality (top row) and of each ground-truth mask (bottom row).
# Arrays are indexed as [slice, channel, row, column].
panels = [('T1', T1), ('T2', T2), ('Flair', Flair), ('T1c', T1c),
          ('Ground Truth(Full)', Label_full), ('Ground Truth(Core)', Label_core),
          ('Ground Truth(ET)', Label_ET), ('Ground Truth(All)', Label_all)]
plt.figure(figsize=(15, 10))
for i, (title, volume) in enumerate(panels):
    plt.subplot(2, 4, i + 1)
    plt.title(title)
    plt.axis('off')
    plt.imshow(volume[90, 0, :, :], cmap='gray')
plt.show()

Preprocessing

- Histogram Equalization

- Normalization to Mean and Std of Each Slice

- Normalization to Mean and Std of Brain Area
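The three preprocessing options can be sketched per 2D slice with NumPy as follows. This is a generic illustration, not the logic inside the notebook's `create_data_onesubject_val` (which is not shown): histogram equalization maps intensities through the normalized cumulative histogram, and the brain-area variant assumes skull-stripped input whose background is exactly zero, so only nonzero voxels contribute to the mean and standard deviation. All function names are hypothetical.

```python
import numpy as np

def histogram_equalize(img, n_bins=256):
    """Map intensities through the normalized cumulative histogram (CDF)."""
    hist, bin_edges = np.histogram(img.ravel(), bins=n_bins)
    cdf = hist.cumsum().astype(np.float64)
    cdf /= cdf[-1]  # scale the CDF to [0, 1]
    bin_centers = (bin_edges[:-1] + bin_edges[1:]) / 2
    return np.interp(img.ravel(), bin_centers, cdf).reshape(img.shape)

def normalize_slice(img, eps=1e-8):
    """Z-score with the mean and std of the whole slice, background included."""
    return (img - img.mean()) / (img.std() + eps)

def normalize_brain(img, eps=1e-8):
    """Z-score with the mean and std of nonzero (brain) voxels only.
    Assumes a skull-stripped slice whose background is exactly 0; it stays 0."""
    out = np.zeros(img.shape, dtype=np.float64)
    brain = img != 0
    if brain.any():
        vals = img[brain]
        out[brain] = (vals - vals.mean()) / (vals.std() + eps)
    return out
```

The difference between the last two matters for BraTS slices, where most pixels are background: per-slice statistics are dominated by zeros, while brain-area statistics describe only the tissue.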


#@title
# create_data_onesubject_val is defined in a cell not shown here. The ground-truth
# calls differ only in the global label_num, which the helper appears to read to
# select the tumor region extracted from the *seg.nii.gz masks.
data_dir = '/content/drive/My Drive/Colab Notebooks/2018/HGG/'
count = 150

pul_seq = 'flair'
Flair = create_data_onesubject_val(data_dir, '**/*{}.nii.gz'.format(pul_seq), count, label=False, hist_equ=True)
pul_seq = 't1ce'
T1c = create_data_onesubject_val(data_dir, '**/*{}.nii.gz'.format(pul_seq), count, label=False, hist_equ=True)
pul_seq = 't1'
T1 = create_data_onesubject_val(data_dir, '**/*{}.nii.gz'.format(pul_seq), count, label=False, hist_equ=True)
pul_seq = 't2'
T2 = create_data_onesubject_val(data_dir, '**/*{}.nii.gz'.format(pul_seq), count, label=False, hist_equ=True)

label_num = 5  # Ground Truth(Full)
Label_full = create_data_onesubject_val(data_dir, '**/*seg.nii.gz', count, label=True, hist_equ=True)
label_num = 2  # Ground Truth(Core)
Label_core = create_data_onesubject_val(data_dir, '**/*seg.nii.gz', count, label=True, hist_equ=True)
label_num = 4  # Ground Truth(ET)
Label_ET = create_data_onesubject_val(data_dir, '**/*seg.nii.gz', count, label=True, hist_equ=True)
label_num = 3  # Ground Truth(All)
Label_all = create_data_onesubject_val(data_dir, '**/*seg.nii.gz', count, label=True, hist_equ=True)

#@title
import matplotlib.pyplot as plt

# Slice 90 of each modality (top row) and of each ground-truth mask (bottom row),
# after loading with hist_equ=True.
panels = [('T1', T1), ('T2', T2), ('Flair', Flair), ('T1c', T1c),
          ('Ground Truth(Full)', Label_full), ('Ground Truth(Core)', Label_core),
          ('Ground Truth(ET)', Label_ET), ('Ground Truth(All)', Label_all)]
plt.figure(figsize=(15, 10))
for i, (title, volume) in enumerate(panels):
    plt.subplot(2, 4, i + 1)
    plt.title(title)
    plt.axis('off')
    plt.imshow(volume[90, 0, :, :], cmap='gray')
plt.show()


#@title
import matplotlib.pyplot as plt

# Entries 90 and 91 of the FLAIR stack (top row) and of `label` (bottom row).
# `label` is defined in a cell not shown here.
plt.figure(figsize=(15, 10))
for col, idx in enumerate([90, 91]):
    plt.subplot(2, 4, col + 1)
    plt.title('Flair, index {}'.format(idx))
    plt.axis('off')
    plt.imshow(Flair[idx, 0, :, :], cmap='gray')
    plt.subplot(2, 4, col + 5)
    plt.title('Ground Truth(Full), index {}'.format(idx))
    plt.axis('off')
    plt.imshow(label[idx, 0, :, :], cmap='gray')
plt.show()

Advanced Methods

- Elastic Deformation
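Elastic deformation is commonly implemented by smoothing a random per-pixel displacement field with a Gaussian and resampling the image along it (the approach popularized by Simard et al., 2003). Below is a minimal 2D sketch with SciPy; `elastic_deform` is a hypothetical name, and `alpha` (displacement scale) and `sigma` (field smoothness) are illustrative defaults, not the notebook's settings. The essential detail is that the image and its label share one displacement field, with nearest-neighbour interpolation for the label.

```python
import numpy as np
from scipy.ndimage import gaussian_filter, map_coordinates

def elastic_deform(image, label, alpha=34.0, sigma=4.0, seed=None):
    """Warp a 2D image and its label map with one shared random elastic field."""
    rng = np.random.default_rng(seed)
    shape = image.shape
    # Random displacements in [-1, 1], smoothed by a Gaussian and scaled by alpha
    dy = gaussian_filter(rng.uniform(-1, 1, shape), sigma, mode="constant") * alpha
    dx = gaussian_filter(rng.uniform(-1, 1, shape), sigma, mode="constant") * alpha
    rows, cols = np.meshgrid(np.arange(shape[0]), np.arange(shape[1]), indexing="ij")
    coords = np.array([rows + dy, cols + dx])
    # Bilinear interpolation for intensities; nearest neighbour for labels so the
    # warped mask contains only the original class values
    warped_image = map_coordinates(image, coords, order=1, mode="reflect")
    warped_label = map_coordinates(label, coords, order=0, mode="reflect")
    return warped_image, warped_label
```

Larger `sigma` gives smoother, more anatomically plausible warps; larger `alpha` gives stronger ones. With `alpha=0` the field vanishes and both inputs come back unchanged.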


#@title
import matplotlib.pyplot as plt

# Entries 90 and 91 of the FLAIR stack (top row) and of `label` (bottom row).
# `label` is defined in a cell not shown here.
plt.figure(figsize=(15, 10))
for col, idx in enumerate([90, 91]):
    plt.subplot(2, 4, col + 1)
    plt.title('Flair, index {}'.format(idx))
    plt.axis('off')
    plt.imshow(Flair[idx, 0, :, :], cmap='gray')
    plt.subplot(2, 4, col + 5)
    plt.title('Ground Truth(Full), index {}'.format(idx))
    plt.axis('off')
    plt.imshow(label[idx, 0, :, :], cmap='gray')
plt.show()


#@title
from keras import backend as K
from keras.layers import (Input, Conv2D, BatchNormalization, MaxPooling2D,
                          Dropout, Conv2DTranspose, concatenate)
from keras.models import Model
from keras.optimizers import Adam

# Inputs are (1, img_size, img_size) and skip connections are concatenated on
# axis 1, i.e. the model is channels-first, so BatchNormalization normalizes
# over the channel axis, axis=1.
K.set_image_data_format('channels_first')

# img_size, dropout and smooth are globals set in a cell not shown here.

def dice_coef(y_true, y_pred):
    y_true_f = K.flatten(y_true)
    y_pred_f = K.flatten(y_pred)
    intersection = K.sum(y_true_f * y_pred_f)
    return (2. * intersection + smooth) / (K.sum(y_true_f) + K.sum(y_pred_f) + smooth)

def dice_coef_loss(y_true, y_pred):
    # Negative Dice: minimizing the loss maximizes the overlap
    return -dice_coef(y_true, y_pred)

def unet_model():
    inputs = Input((1, img_size, img_size))

    # Encoder: (3x3 conv + BN) x 2 per level, then 2x2 max pooling and dropout
    conv1 = Conv2D(64, (3, 3), activation='relu', padding='same')(inputs)
    batch1 = BatchNormalization(axis=1)(conv1)
    conv1 = Conv2D(64, (3, 3), activation='relu', padding='same')(batch1)
    batch1 = BatchNormalization(axis=1)(conv1)
    pool1 = MaxPooling2D((2, 2))(batch1)
    pool1 = Dropout(dropout * 0.5)(pool1)

    conv2 = Conv2D(128, (3, 3), activation='relu', padding='same')(pool1)
    batch2 = BatchNormalization(axis=1)(conv2)
    conv2 = Conv2D(128, (3, 3), activation='relu', padding='same')(batch2)
    batch2 = BatchNormalization(axis=1)(conv2)
    pool2 = MaxPooling2D((2, 2))(batch2)
    pool2 = Dropout(dropout)(pool2)

    conv3 = Conv2D(256, (3, 3), activation='relu', padding='same')(pool2)
    batch3 = BatchNormalization(axis=1)(conv3)
    conv3 = Conv2D(256, (3, 3), activation='relu', padding='same')(batch3)
    batch3 = BatchNormalization(axis=1)(conv3)
    pool3 = MaxPooling2D((2, 2))(batch3)
    pool3 = Dropout(dropout)(pool3)

    conv4 = Conv2D(512, (3, 3), activation='relu', padding='same')(pool3)
    batch4 = BatchNormalization(axis=1)(conv4)
    conv4 = Conv2D(512, (3, 3), activation='relu', padding='same')(batch4)
    batch4 = BatchNormalization(axis=1)(conv4)
    pool4 = MaxPooling2D(pool_size=(2, 2))(batch4)
    pool4 = Dropout(dropout)(pool4)

    # Bottleneck
    conv5 = Conv2D(1024, (3, 3), activation='relu', padding='same')(pool4)
    batch5 = BatchNormalization(axis=1)(conv5)
    conv5 = Conv2D(1024, (3, 3), activation='relu', padding='same')(batch5)
    batch5 = BatchNormalization(axis=1)(conv5)

    # Decoder: 2x2 transposed conv, concatenate the skip connection on the
    # channel axis, dropout, then (3x3 conv + BN) x 2
    up6 = Conv2DTranspose(512, (2, 2), strides=(2, 2), padding='same')(batch5)
    up6 = concatenate([up6, conv4], axis=1)
    up6 = Dropout(dropout)(up6)
    conv6 = Conv2D(512, (3, 3), activation='relu', padding='same')(up6)
    batch6 = BatchNormalization(axis=1)(conv6)
    conv6 = Conv2D(512, (3, 3), activation='relu', padding='same')(batch6)
    batch6 = BatchNormalization(axis=1)(conv6)

    up7 = Conv2DTranspose(256, (2, 2), strides=(2, 2), padding='same')(batch6)
    up7 = concatenate([up7, conv3], axis=1)
    up7 = Dropout(dropout)(up7)
    conv7 = Conv2D(256, (3, 3), activation='relu', padding='same')(up7)
    batch7 = BatchNormalization(axis=1)(conv7)
    conv7 = Conv2D(256, (3, 3), activation='relu', padding='same')(batch7)
    batch7 = BatchNormalization(axis=1)(conv7)

    up8 = Conv2DTranspose(128, (2, 2), strides=(2, 2), padding='same')(batch7)
    up8 = concatenate([up8, conv2], axis=1)
    up8 = Dropout(dropout)(up8)
    conv8 = Conv2D(128, (3, 3), activation='relu', padding='same')(up8)
    batch8 = BatchNormalization(axis=1)(conv8)
    conv8 = Conv2D(128, (3, 3), activation='relu', padding='same')(batch8)
    batch8 = BatchNormalization(axis=1)(conv8)

    up9 = Conv2DTranspose(64, (2, 2), strides=(2, 2), padding='same')(batch8)
    up9 = concatenate([up9, conv1], axis=1)
    up9 = Dropout(dropout)(up9)
    conv9 = Conv2D(64, (3, 3), activation='relu', padding='same')(up9)
    batch9 = BatchNormalization(axis=1)(conv9)
    conv9 = Conv2D(64, (3, 3), activation='relu', padding='same')(batch9)
    batch9 = BatchNormalization(axis=1)(conv9)

    # 1x1 conv + sigmoid gives a per-pixel tumor probability
    conv10 = Conv2D(1, (1, 1), activation='sigmoid')(batch9)

    model = Model(inputs=[inputs], outputs=[conv10])
    model.compile(optimizer=Adam(lr=1e-5), loss=dice_coef_loss, metrics=['accuracy', dice_coef])
    return model

m = unet_model()
m.summary()

# from keras.utils import plot_model

# plot_model(m, to_file='model.png')
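Because `unet_model` halves the spatial size four times with 2×2 max pooling and doubles it back with stride-2 `Conv2DTranspose`, each skip connection only lines up if `img_size` is divisible by 2^4 = 16 (a size of 15, for instance, would pool to 7 and upsample to 14, and the concatenation would fail). The pure-Python helper below is a hypothetical aid, not part of the notebook: it lists the channels-first feature-map shapes along the encoder path and rejects incompatible sizes.

```python
def unet_shapes(img_size, depth=4, base_filters=64):
    """Return (channels, height, width) for each encoder level plus the bottleneck
    of a U-Net that halves the size and doubles the filters at every level."""
    if img_size % (2 ** depth) != 0:
        raise ValueError("img_size must be divisible by {}".format(2 ** depth))
    shapes = []
    size, filters = img_size, base_filters
    for _ in range(depth + 1):  # `depth` encoder levels, then the bottleneck
        shapes.append((filters, size, size))
        size //= 2
        filters *= 2
    return shapes

print(unet_shapes(240))
```

For full 240 × 240 BraTS slices this gives (64, 240, 240), (128, 120, 120), (256, 60, 60), (512, 30, 30) and a (1024, 15, 15) bottleneck.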


#@title

from IPython.display import SVG

from keras.utils.vis_utils import model_to_dot

SVG(model_to_dot(m).create(prog='dot', format='svg'))


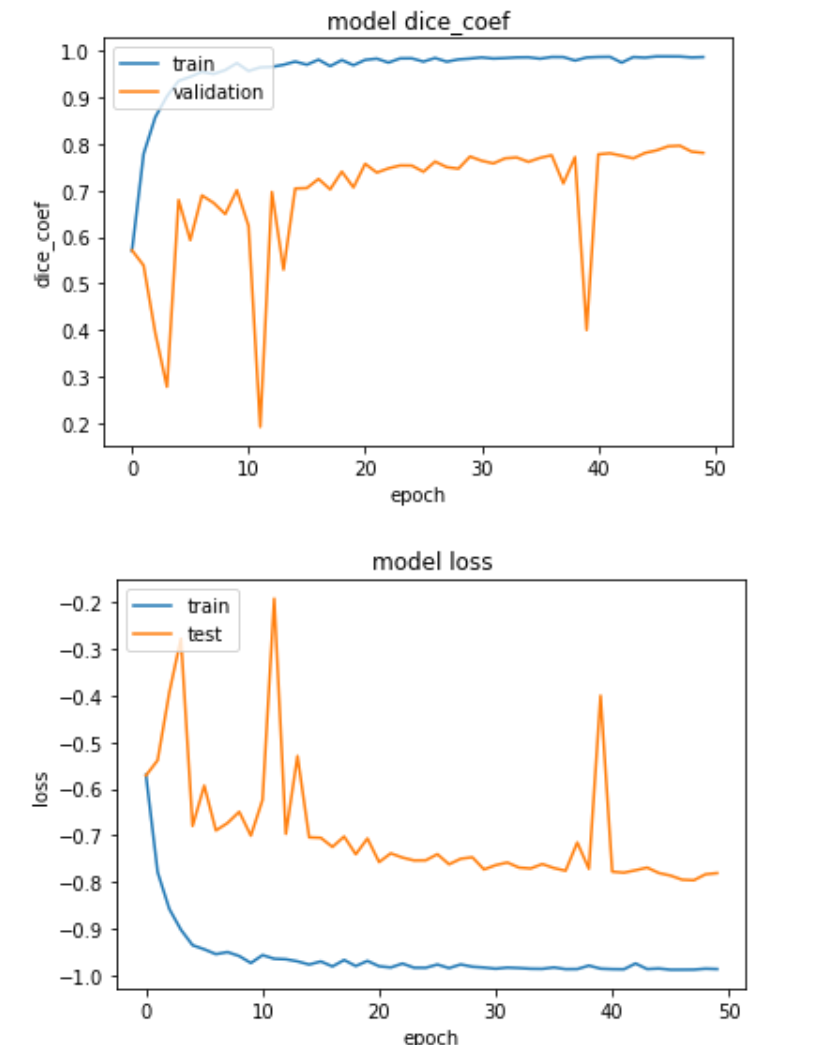

First Experiment

- BatchNormalization

- No Dropout

- Simple Augmentation

- Dice Coefficient as Loss

- Normalization to Mean and Std of Each Slice

- Without Histogram Equalization

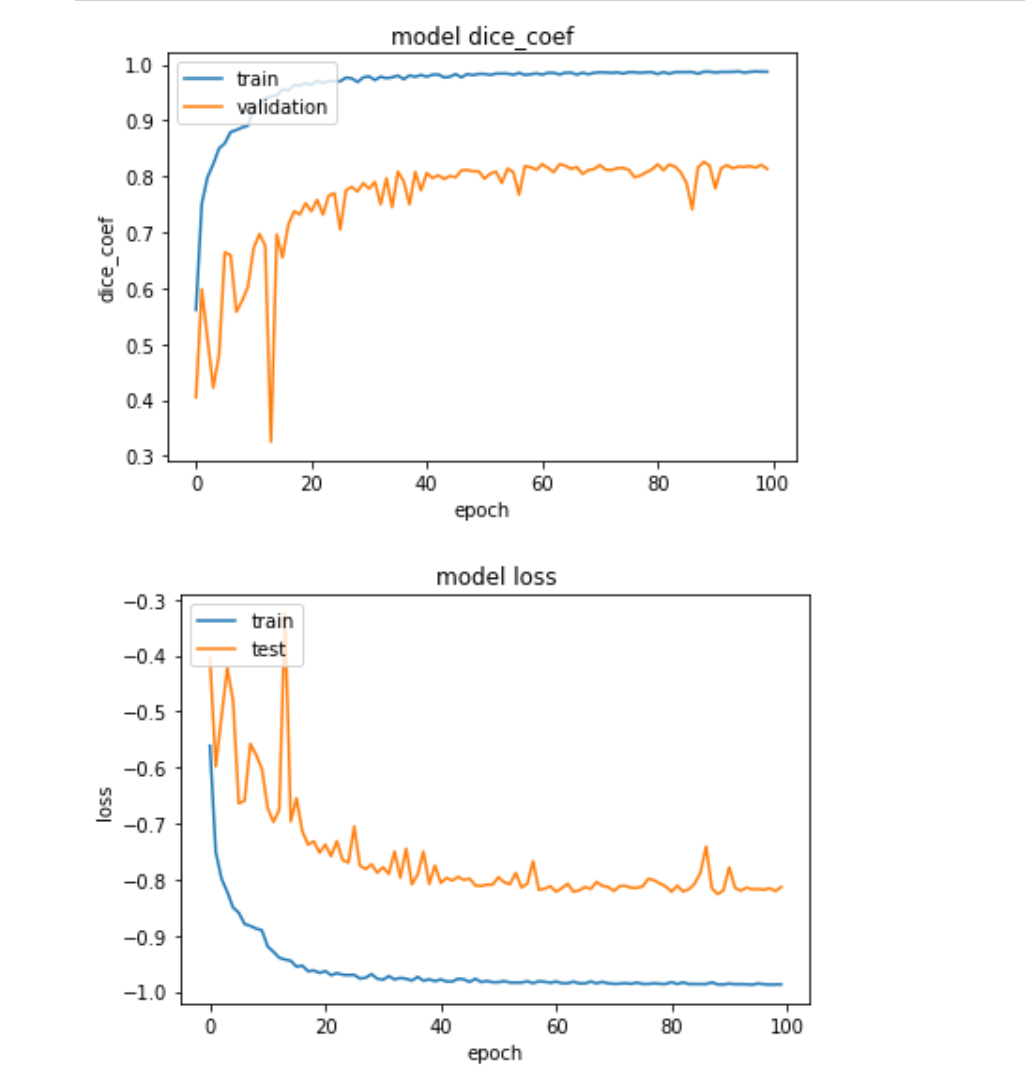
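The Dice coefficient used as the loss is 2|A ∩ B| / (|A| + |B|) on the flattened masks, with `smooth` added to numerator and denominator so it stays defined when both masks are empty. The NumPy mirror of the notebook's `dice_coef` below is a sketch for checking values by hand; `dice_coef_np` is a hypothetical name and `smooth = 1.0` is an assumption, since the notebook's value is not shown.

```python
import numpy as np

def dice_coef_np(y_true, y_pred, smooth=1.0):
    """Soft Dice: (2 * sum(t * p) + smooth) / (sum(t) + sum(p) + smooth)."""
    y_true_f = np.asarray(y_true, dtype=np.float64).ravel()
    y_pred_f = np.asarray(y_pred, dtype=np.float64).ravel()
    intersection = np.sum(y_true_f * y_pred_f)
    return (2.0 * intersection + smooth) / (y_true_f.sum() + y_pred_f.sum() + smooth)
```

For `y_true = [1, 1, 0, 0]` and `y_pred = [1, 0, 1, 0]` the intersection is 1, giving (2·1 + 1) / (2 + 2 + 1) = 0.6. Two empty masks give (0 + 1) / (0 + 1) = 1.0, so tumor-free slices count as a perfect match instead of producing 0/0.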

Second Experiment

- BatchNormalization

- Dropout 0.1

- Simple Augmentation

- Dice Coefficient as Loss

- Normalization to Mean and Std of Each Slice

- Without Histogram Equalization

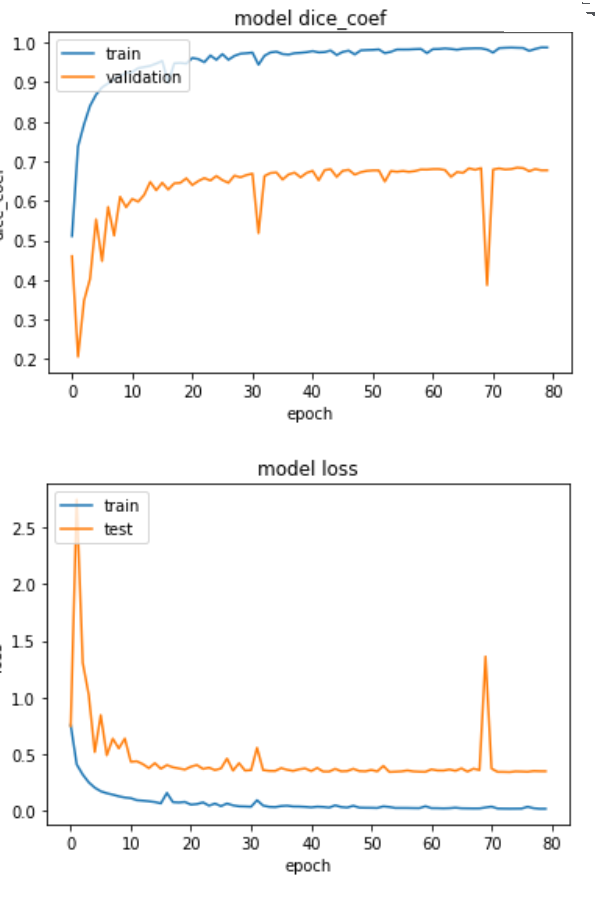

Third Experiment

- BatchNormalization

- Dropout 0.2

- Simple Augmentation

- Dice Coefficient as Loss

- Normalization to Mean and Std of Brain Area

- Without Histogram Equalization

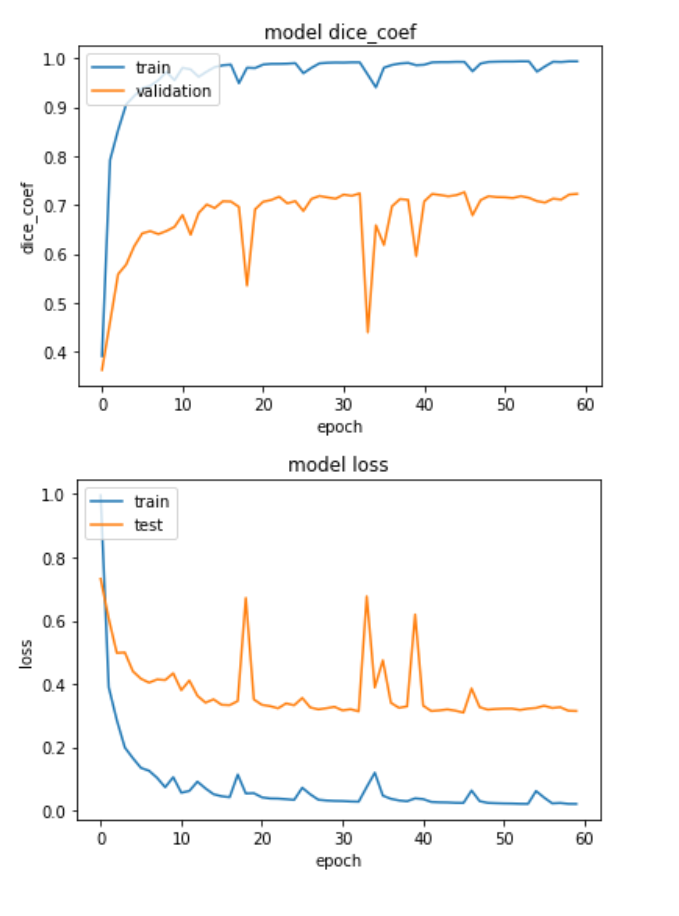

Sixth Experiment

- L2 Regularization

- LeakyReLU Activation

- Dropout 0.2

- Simple Augmentation & Elastic Deformation

- Dice Coefficient + Cross-Entropy as Loss

- Normalization to Mean and Std of Brain Area

- Without Histogram Equalization
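The sixth experiment combines Dice with cross-entropy. The weighting it used is not shown, so the NumPy sketch below assumes equal weights and binary cross-entropy on sigmoid outputs; `dice_bce_loss` is a hypothetical name. It uses 1 - Dice rather than the notebook's -Dice, which differs only by a constant and therefore has the same gradients.

```python
import numpy as np

def dice_bce_loss(y_true, y_pred, smooth=1.0, eps=1e-7):
    """Binary cross-entropy + (1 - soft Dice), equally weighted (assumed)."""
    t = np.asarray(y_true, dtype=np.float64).ravel()
    # Clip probabilities away from 0 and 1 so the logarithms stay finite
    p = np.clip(np.asarray(y_pred, dtype=np.float64).ravel(), eps, 1.0 - eps)
    # Mean binary cross-entropy over all pixels
    bce = -np.mean(t * np.log(p) + (1.0 - t) * np.log(1.0 - p))
    # Soft Dice on the clipped probabilities
    dice = (2.0 * np.sum(t * p) + smooth) / (np.sum(t) + np.sum(p) + smooth)
    return bce + (1.0 - dice)
```

A prediction of 0.5 everywhere for `y_true = [1, 0]` costs log 2 from the cross-entropy term plus 1/3 from the Dice term. Cross-entropy supplies a per-pixel gradient even when the overlap is tiny, while Dice counters the heavy background/tumor imbalance. A Keras version would swap the NumPy calls for backend ops such as `K.binary_crossentropy` and `K.sum`, as `dice_coef` does.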


#@title

m = unet_model()

m.summary()


#@title

from IPython.display import SVG

from keras.utils.vis_utils import model_to_dot

SVG(model_to_dot(m).create(prog='dot', format='svg'))
